NIS - NFS - BIND

yum install ypserv / apt install ypserv

yum install rpcbind / apt install rpcbind

ypdomainname nis-server / apt install nis-server

# cat /etc/sysconfig/network

# Created by cloud-init on instance boot automatically, do not edit.

#

NETWORKING=yes

NISDOMAIN=nis-server

# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.0.12 nis-server

172.16.0.14 nis-client

systemctl start rpcbind ypserv ypxfrd yppasswdd

systemctl enable rpcbind ypserv ypxfrd yppasswdd

/usr/lib64/yp/ypinit -m (on Ubuntu: /usr/lib/yp/ypinit -m)

useradd -g 1024 -u 1024 testuser01

yum install nfs-utils

# cat /etc/exports

/home 172.16.0.0/28(rw,no_root_squash)

[root@ip-172-16-0-12 ~]# showmount -e

Export list for ip-172-16-0-12.ec2.internal:

/home 172.16.0.0/28
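
The /28 in the export covers only 172.16.0.0 through 172.16.0.15, so a client outside that range will be refused the mount. A small sketch for checking whether a client address is covered. The helper names ip_to_int and in_cidr are made up for these notes, and it assumes a POSIX shell with 64-bit arithmetic and octets written without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# Runs in a subshell so the IFS change does not leak to the caller.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Return 0 if address $1 lies inside network $2 (written as a.b.c.d/len).
in_cidr() (
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    len=${2#*/}
    # Build the netmask: len leading one-bits, truncated to 32 bits.
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
)
```

For example, `in_cidr 172.16.0.14 172.16.0.0/28 && echo covered` prints covered, while 172.16.0.16 does not match.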

firewall-cmd --add-service=nfs --permanent

firewall-cmd --add-service={nfs3,mountd,rpc-bind} --permanent

firewall-cmd --reload

systemctl start rpcbind nfs-server

systemctl enable rpcbind nfs-server

NIS and NFS Client

[root@nis-client ~]# yum install ypbind rpcbind nfs-utils

[root@nis-client ~]# ypdomainname nis-server

[root@nis-client ~]# echo "NISDOMAIN=nis-server" >> /etc/sysconfig/network

[root@nis-client ~]# echo "172.16.0.12 nis-server" >> /etc/hosts

[root@nis-client ~]# echo "172.16.0.9 nis-client" >> /etc/hosts

[root@nis-client ~]# authconfig --enablenis --nisdomain=nis-server --nisserver=nis-server --enablemkhomedir --update

[root@nis-client ~]# systemctl start rpcbind ypbind

[root@nis-client ~]# systemctl enable rpcbind ypbind

ypwhich

[root@nis-client /]# mount nis-server:/home /home

[root@nis-client /]# vi /etc/fstab
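
The entry added to /etc/fstab is not shown above. One possible line, assuming default NFS options plus `_netdev` so the mount waits for the network, is appended by the sketch below. The function name add_nfs_fstab is hypothetical; pointing it at a scratch file first is a safe way to try it.

```shell
# Append an NFS entry to an fstab-style file unless the mount point is already listed.
# Usage: add_nfs_fstab <fstab-file> <server:/export> <mountpoint>
add_nfs_fstab() {
    fstab=$1 src=$2 mnt=$3
    # Field 2 of each fstab line is the mount point; comment lines are skipped.
    if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
        echo "$mnt already present in $fstab" >&2
        return 0
    fi
    # Assumed options: defaults,_netdev (adjust to taste).
    printf '%s %s nfs defaults,_netdev 0 0\n' "$src" "$mnt" >> "$fstab"
}
```

On the client this would be called as `add_nfs_fstab /etc/fstab nis-server:/home /home`, followed by `mount -a` to test the entry.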

reference: https://www.server-world.info/en

Automount using autofs

root@debian01:/photos# apt-get install autofs

root@debian01:~# showmount -e server1

Export list for server1:

/movies 172.18.14.0/24

/photos 172.18.14.0/24

/users 172.18.14.0/24,192.168.10.0/24

mkdir /nfs

vim /etc/auto.master

/nfs /etc/auto.photos

vim /etc/auto.photos

photos server1:/photos

movies server1:/movies
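
The map file can also be derived from the showmount output instead of typed by hand. A rough sketch, assuming the output format shown above (one header line, then one export per line). exports_to_automap is a made-up helper name. Note that it emits every export, including /users, which the hand-written map leaves out.

```shell
# Turn `showmount -e` output (read from stdin) into indirect autofs map lines.
# Usage: showmount -e server1 | exports_to_automap server1
exports_to_automap() {
    awk -v srv="$1" '
        NR == 1 { next }            # skip the "Export list for ...:" header
        {
            key = $1
            sub(/^\//, "", key)     # map key is the export path without the leading slash
            gsub(/\//, "_", key)    # nested paths are flattened into a single key
            printf "%s %s:%s\n", key, srv, $1
        }'
}
```

Redirecting the result to a scratch file and comparing it with /etc/auto.photos before replacing anything is the cautious way to use it.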

service autofs start

root@debian01:~# df -h

Filesystem Size Used Avail Use% Mounted on

rootfs 4.5G 3.7G 596M 87% /

udev 10M 0 10M 0% /dev

tmpfs 208M 604K 207M 1% /run

/dev/mapper/debian01-root 4.5G 3.7G 596M 87% /

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 415M 224K 415M 1% /run/shm

/dev/sda1 228M 32M 185M 15% /boot

/dev/sr0 1.1G 1.1G 0 100% /media/cdrom0

root@debian01:~# ls /nfs

movies photos

root@debian01:~# ls /nfs/movies/

movie1.mpeg movie2.mpeg

root@debian01:~# ls /nfs/photos

photo1.jpg photo2.jpg

root@debian01:~# df -h

Filesystem Size Used Avail Use% Mounted on

rootfs 4.5G 3.7G 596M 87% /

udev 10M 0 10M 0% /dev

tmpfs 208M 604K 207M 1% /run

/dev/mapper/debian01-root 4.5G 3.7G 596M 87% /

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 415M 224K 415M 1% /run/shm

/dev/sda1 228M 32M 185M 15% /boot

/dev/sr0 1.1G 1.1G 0 100% /media/cdrom0

server1:/movies 3.5G 1.4G 2.2G 39% /nfs/movies

server1:/photos 3.5G 1.4G 2.2G 39% /nfs/photos

root@debian01:~#

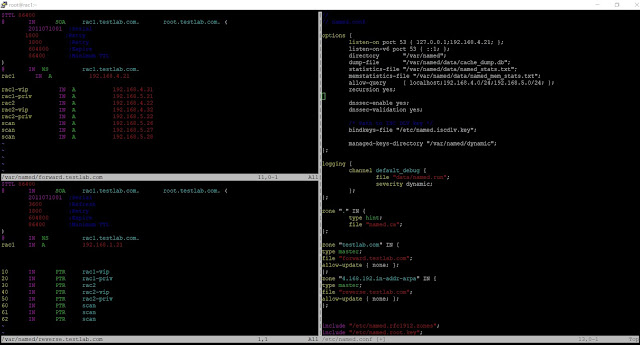

BIND

yum install bind

[root@rac1 ~]# cat /etc/named.conf

//

// named.conf

//

// Provided by Red Hat bind package to configure the ISC BIND named(8) DNS

// server as a caching only nameserver (as a localhost DNS resolver only).

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

options {

listen-on port 53 { 127.0.0.1;192.168.4.21; };

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

allow-query { localhost;192.168.4.0/24;192.168.5.0/24; };

recursion yes;

dnssec-enable yes;

dnssec-validation yes;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.iscdlv.key";

managed-keys-directory "/var/named/dynamic";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

zone "testlab.com" IN {

type master;

file "forward.testlab.com";

allow-update { none; };

};

zone "4.168.192.in-addr.arpa" IN {

type master;

file "reverse.testlab.com";

allow-update { none; };

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

[root@rac1 ~]# cat /var/named/forward.testlab.com

$TTL 86400

@ IN SOA rac1.testlab.com. root.testlab.com. (

2011071001 ;Serial

1800 ;Refresh

1800 ;Retry

604800 ;Expire

86400 ;Minimum TTL

)

@ IN NS rac1.testlab.com.

@ IN A 192.168.4.21

rac1-vip IN A 192.168.4.31

rac1-priv IN A 192.168.5.21

rac2 IN A 192.168.4.22

rac2-vip IN A 192.168.4.32

rac2-priv IN A 192.168.5.22

scan IN A 192.168.5.26

scan IN A 192.168.5.27

scan IN A 192.168.5.28

rac1 IN A 192.168.4.21

[root@rac1 ~]# cat /var/named/reverse.testlab.com

$TTL 86400

@ IN SOA rac1.testlab.com. root.testlab.com. (

2011071001 ;Serial

3600 ;Refresh

1800 ;Retry

604800 ;Expire

86400 ;Minimum TTL

)

@ IN NS rac1.testlab.com.

@ IN A 192.168.1.21

rac1 IN A 192.168.1.21

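10 IN PTR rac1-vip.testlab.com.

20 IN PTR rac1-priv.testlab.com.

30 IN PTR rac2.testlab.com.

40 IN PTR rac2-vip.testlab.com.

50 IN PTR rac2-priv.testlab.com.

60 IN PTR scan.testlab.com.

61 IN PTR scan.testlab.com.

62 IN PTR scan.testlab.com.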
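
PTR targets in a reverse zone need to be fully qualified with a trailing dot, otherwise named appends the reverse zone's origin to them. One way to avoid hand-editing mistakes is to derive the PTR lines from the forward zone. A sketch, assuming the simple "name IN A address" layout used in forward.testlab.com above. forward_to_ptr is a hypothetical helper, and only addresses in the given /24 are emitted.

```shell
# Print PTR lines for every named A record in a forward zone file that falls in a given /24.
# Usage: forward_to_ptr <zone-file> <domain> <first-three-octets>
forward_to_ptr() {
    awk -v dom="$2" -v net="$3" '
        $2 == "IN" && $3 == "A" && $1 != "@" {
            n = split($4, o, ".")
            # Keep only addresses whose first three octets match the requested network.
            if (n == 4 && (o[1] "." o[2] "." o[3]) == net)
                printf "%s IN PTR %s.%s.\n", o[4], $1, dom
        }' "$1"
}
```

Called as `forward_to_ptr /var/named/forward.testlab.com testlab.com 192.168.4`, it lists the records that belong in the 4.168.192.in-addr.arpa zone. The 192.168.5.x hosts would need their own reverse zone.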

[root@serv1 named]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="dhcp"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="eth0"

UUID="bab7d116-b33a-43ff-b27e-bf2a1bd1dce4"

DEVICE="eth0"

ONBOOT="yes"

IPV6_PRIVACY="no"

# required to stop the dhclient script from overwriting /etc/resolv.conf

PEERDNS=no

[root@serv1 named]# cat /etc/resolv.conf

; generated by /usr/sbin/dhclient-script

search testlab.com mshome.net

nameserver 192.168.1.10

nameserver 172.18.14.4

------ Troubleshooting

named-checkconf /etc/named.conf

named-checkconf -z /etc/named.conf

named-checkzone testlab.com /var/named/forward.testlab.com

named-checkzone 4.168.192.in-addr.arpa /var/named/reverse.testlab.com

service named restart

systemctl restart named
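
After editing a zone file, the serial in the SOA record should be increased before restarting named, otherwise secondaries will not pick up the change. A minimal sketch for the YYYYMMDDNN convention that the serial 2011071001 appears to follow. next_serial is a hypothetical helper, and the optional second argument exists only so the date can be fixed when testing.

```shell
# Compute the next zone serial in YYYYMMDDNN form.
# Usage: next_serial <current-serial> [today-as-YYYYMMDD]
next_serial() {
    current=$1
    today=${2:-$(date +%Y%m%d)}
    # First revision of the day is numbered 01, matching the serial used in these notes.
    first=$(( $today * 100 + 1 ))
    if [ "$current" -lt "$first" ]; then
        echo "$first"
    else
        # Already edited today (or serial is ahead): just increment.
        echo $(( $current + 1 ))
    fi
}
```

The result still has to be written into the zone file by hand or with an editor before running named-checkzone again.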

-------------------

[root@server1 ~]# named-checkconf -z

zone logic.com/IN: loaded serial 2011071001

zone 10.10.168.192.in.addr-arpa/IN: loaded serial 2011071001

zone logic1.com/IN: loaded serial 2011071001

zone 11.10.168.192.in.addr-arpa/IN: loaded serial 2011071001

zone logic20.com/IN: loaded serial 2011071001

zone 12.11.168.192.in.addr-arpa/IN: loaded serial 2011071001

zone logic21.com/IN: loaded serial 2011071001

zone logic22.com/IN: loaded serial 2011071001

zone localhost.localdomain/IN: loaded serial 0

zone localhost/IN: loaded serial 0

zone 1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa/IN: loaded serial 0

zone 1.0.0.127.in-addr.arpa/IN: loaded serial 0

zone 0.in-addr.arpa/IN: loaded serial 0

[root@client2 conf.d]# httpd -S

VirtualHost configuration:

*:80 is a NameVirtualHost

default server client2.logic20.com (/etc/httpd/conf.d/logic20.conf:1)

port 80 namevhost client2.logic20.com (/etc/httpd/conf.d/logic20.conf:1)

alias logic20.com

port 80 namevhost client2.logic21.com (/etc/httpd/conf.d/logic21.conf:1)

alias logic21.com

port 80 namevhost client2.logic22.com (/etc/httpd/conf.d/logic22.conf:1)

alias logic22.com

ServerRoot: "/etc/httpd"

Main DocumentRoot: "/var/www/html"

Main ErrorLog: "/etc/httpd/logs/error_log"

Mutex authn-socache: using_defaults

Mutex default: dir="/run/httpd/" mechanism=default

Mutex mpm-accept: using_defaults

Mutex authdigest-opaque: using_defaults

Mutex proxy-balancer-shm: using_defaults

Mutex rewrite-map: using_defaults

Mutex authdigest-client: using_defaults

Mutex proxy: using_defaults

PidFile: "/run/httpd/httpd.pid"

Define: _RH_HAS_HTTPPROTOCOLOPTIONS

Define: DUMP_VHOSTS

Define: DUMP_RUN_CFG

User: name="apache" id=48

Group: name="apache" id=48

elinks logic.com -- on server1

elinks logic1.com -- on client1

elinks logic20.com -- on client2

elinks logic21.com -- on client2

elinks logic22.com -- on client2

Router

Client

ifconfig enp0s8 10.0.0.1 netmask 255.255.255.0

sudo route add default gw 10.0.0.254

/etc/resolv.conf

nameserver 8.8.8.8

Server

ifconfig enp0s8 10.0.0.254 netmask 255.255.255.0

iptables -L -n

--enable masquerading

sudo iptables --table nat --append POSTROUTING --out-interface enp0s3 -j MASQUERADE

--allow forwarding from the internal interface

sudo iptables --append FORWARD --in-interface enp0s8 -j ACCEPT

--enable IP forwarding in the kernel via sysctl

sudo sysctl -w net.ipv4.ip_forward=1
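
`sysctl -w` only changes the running kernel, so the setting is lost on reboot. A sketch for persisting it, assuming the distribution reads drop-in files from /etc/sysctl.d. The file name 99-ip-forward.conf and the function name persist_ip_forward are chosen here for illustration and do not come from any package.

```shell
# Ensure a sysctl-style file contains "net.ipv4.ip_forward = 1" exactly once.
# Usage: persist_ip_forward [conf-file]
persist_ip_forward() {
    conf=${1:-/etc/sysctl.d/99-ip-forward.conf}
    # Nothing to do if the setting is already present with value 1.
    if grep -Eq '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1[[:space:]]*$' "$conf" 2>/dev/null; then
        return 0
    fi
    echo 'net.ipv4.ip_forward = 1' >> "$conf"
}
```

Running `sysctl --system` afterwards loads the file without a reboot.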

iptables-save

sudo sh -c "iptables-save > /etc/iptables.rules"

iptables-restore < /etc/iptables.rules

for firewalld

# firewall-cmd --direct --permanent --add-rule ipv4 nat POSTROUTING 0 -o eth0 -j MASQUERADE

# firewall-cmd --direct --permanent --add-rule ipv4 filter FORWARD 0 -i eth1 -o eth0 -j ACCEPT

# firewall-cmd --direct --permanent --add-rule ipv4 filter FORWARD 0 -i eth0 -o eth1 -m state --state RELATED,ESTABLISHED -j ACCEPT

# firewall-cmd --reload

[root@server1 ~]# firewall-cmd --permanent --add-service http

success

[root@server1 ~]# firewall-cmd --permanent --add-service dns

success

[root@server1 ~]# firewall-cmd --permanent --add-service nfs

eth0: connected to the internet (outbound traffic)

eth1: connected to the internal network (incoming traffic from internal hosts)

2: eth0: mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:15:5d:54:4e:3b brd ff:ff:ff:ff:ff:ff

inet 172.18.14.51/28 brd 172.18.14.63 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::215:5dff:fe54:4e3b/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:15:5d:54:4e:3c brd ff:ff:ff:ff:ff:ff

inet 192.168.10.10/24 brd 192.168.10.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::215:5dff:fe54:4e3c/64 scope link

valid_lft forever preferred_lft forever

[root@server1 ~]# ip route show

default via 172.18.14.49 dev eth0 proto static metric 100

default via 172.18.14.51 dev eth1 proto static metric 101

169.254.0.0/16 dev eth1 scope link metric 1003

172.18.14.48/28 dev eth0 proto kernel scope link src 172.18.14.51 metric 100

172.18.14.51 dev eth1 proto static scope link metric 101

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.10 metric 101

ip route add 192.168.11.0/24 via 192.168.10.10 dev eth1

Multiple sites on single host

Add in /etc/httpd/conf/httpd.conf

IncludeOptional sites-enabled/*.conf

mkdir /etc/httpd/sites-available

cd /etc/httpd/sites-available

cat site1.conf

<VirtualHost *:80>

ServerName site1

ServerAlias site1

DocumentRoot /var/www/site1/

</VirtualHost>
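
With several sites, creating the vhost file and enabling it can be scripted. A sketch under the assumption that sites-available holds the real files and sites-enabled holds symlinks to them (the Debian-style layout). make_site is a hypothetical helper and the vhost body is a bare minimum.

```shell
# Create a name-based vhost file under <root>/sites-available and enable it
# with a symlink in <root>/sites-enabled.
# Usage: make_site <httpd-root> <site-name>
make_site() {
    root=$1 site=$2
    mkdir -p "$root/sites-available" "$root/sites-enabled"
    cat > "$root/sites-available/$site.conf" <<EOF
<VirtualHost *:80>
    ServerName $site
    DocumentRoot /var/www/$site/
</VirtualHost>
EOF
    # Relative link so the tree can be moved or tested in a scratch directory.
    ln -sf "../sites-available/$site.conf" "$root/sites-enabled/$site.conf"
}
```

On the server this would be `make_site /etc/httpd site1`, then `httpd -S` to confirm the vhost is picked up before reloading httpd.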

openssl genpkey -algorithm rsa -pkeyopt rsa_keygen_bits:2048 -out logic5.logic1.com.key

[root@server1 certs]# openssl req -new -key logic5.logic1.com.key -out logic5.logic1.com.csr

[root@server1 certs]# openssl x509 -req -days 365 -signkey logic5.logic1.com.key -in logic5.logic1.com.csr -out logic5.logic1.com.crt

-rw-r--r--. 1 root root 1277 May 21 06:15 logic5.logic1.com.crt

-rw-r--r--. 1 root root 1074 May 21 06:10 logic5.logic1.com.csr

-rw-r--r--. 1 root root 1704 May 21 06:02 logic5.logic1.com.key